Have you ever wondered if the product or feature you're working on genuinely matters to your customers? How do you know the thing you’re working on is important? Is it because customers are asking for it? Or because you thought it would be cool?

Making Things

I was skimming through Escaping the Build Trap by Melissa Perri this weekend. I read it for the first time about a year ago, and wanted a mental refresh after writing last week’s post. On the surface, it’s another treatise on how teams should focus on outcomes over outputs, but it dives deeply (and effectively) into customer-centric software development. It’s a head-nodder for me all the way through, as the concepts in the book align closely with my approach to delivering software. It’s a guide for organizations looking to shift from a feature-focused mindset to one that is customer-centered and outcome-driven, and for building products that truly meet user needs and contribute to the success of the business.

Alignment and Autonomy

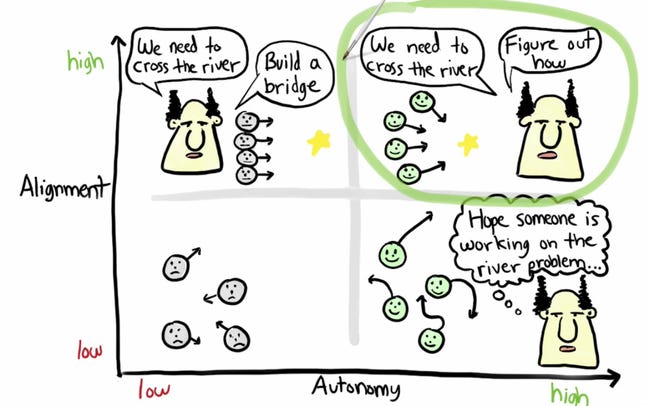

Melissa points out that organizations need to align on changing this mindset. Many orgs (and org leaders) want to see things delivered - and too often, they want to tell you what the thing is that should be delivered rather than let teams focus on solving problems. Remember, your customers don’t want software, they want their problem solved.

But it’s easier to count widgets built over outcomes or behaviour change, and often, experienced leaders can feel like they’re the ones with the best answers purely because of their position in the org. I’ve mentioned a term coined by Ronny Kohavi before - the HiPPO (highest paid person’s opinion) that often applies here.

Don’t feed the HiPPO.

All this reminds me of this drawing that’s been floating around the internet for years.

Ideally leaders should help us focus on the problem we need to solve, but even if/when they don’t, we can still focus on solving problems.

The Balance

How can you balance focusing on outcomes when the pointy-hairs just want bridges built? The internet is full of people complaining about how they can’t do what they want because management wants something else. My advice - as it has been for nearly 30 years - is simply to find balance between what people want and what they need.

On my very first day at Microsoft, Doug - my manager - handed me a list of test cases printed from an Excel sheet. I had done a ton of test automation at my previous job, and was a big user of the beta of Microsoft Test (which later became Visual Test). In the days before I started, I re-read the docs. I was nervous, as this was Microsoft, and I thought I’d be expected to crank out automation at billg levels.

I glanced at the list and asked, “when do you need these automated?” Without a pause, Doug said, “Oh no - we don’t have time to automate these, you need to run them every day.”

This was a Monday - they were all automated by Thursday, and I used my extra time to clean things up, add new test cases, and help Doug and the rest of the team get a lot more done. Doug wanted those test cases run every day (we got a new build every day), but what he needed was to make sure the networking features exposed in the UI continued to work as expected.

It’s balance.

The Skill and HDD

In the LinkedIn thread for my last post, Wayne Roseberry wrote,

Getting good customer feedback is a real skill.

Which is short, to the point, and spot on.

Instead of feeding the HiPPO, let’s feed a hypothesis. Here’s an approach that’s worked for me, and may work for you - Hypothesis Driven Development (I, too, am tired of the *DDs, so feel free to help me find a better name).

Let’s say we’re building a web store that sells dog toys. The HiPPO approaches you and says that your team needs to build a banner on the front page that asks people to sign up for your mailing list. In some babbling conversation, you take a few notes on things like “not enough people on our mailing list”, and “email is a great way to move more product”. You nod your head and get to work…but not on the banner.

The first thing you should do before delivering any new feature is to develop a hypothesis (or two). We want outcome X to happen if we build Y. In this case, our hypothesis could be:

Increase mailing list size by 50% within two weeks of launching the banner

or

40% of all site visitors choose to sign up for the mailing list

Think of the outcomes (or customer behaviors that should change), then ensure you have the measurements in place. This isn’t anywhere near a significant delay in getting to the work - it’s just a quick pause for the team to discuss what should change when the feature is delivered.
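If it helps to see what "measurements in place" could mean for the two example hypotheses, here is a minimal sketch. The function names and all of the counts are made up for illustration; in practice the numbers would come from wherever you already track mailing list size and site traffic.

```python
def growth_percent(before: int, after: int) -> float:
    """Percentage growth between two counts (e.g. mailing list size)."""
    if before <= 0:
        raise ValueError("before must be a positive count")
    return 100.0 * (after - before) / before


def signup_rate_percent(signups: int, visitors: int) -> float:
    """Percentage of site visitors who signed up."""
    if visitors <= 0:
        raise ValueError("visitors must be a positive count")
    return 100.0 * signups / visitors


# Hypothetical numbers, two weeks after the banner launched.
list_before, list_after = 2000, 3100
signups, visitors = 320, 8000

# Hypothesis 1: mailing list grows by 50% within two weeks.
print(growth_percent(list_before, list_after) >= 50.0)

# Hypothesis 2: 40% of all site visitors sign up.
print(signup_rate_percent(signups, visitors) >= 40.0)
```

The point is not the arithmetic, which is trivial, but that the team agrees on the exact calculation and the threshold before the banner ships.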

But don’t write the banner just yet…

First answer two questions.

What action will we take if our hypothesis is successful?

What action will we take if our hypothesis is unsuccessful?

This brief pause is there to make sure your hypothesis makes sense. In this case, let’s say that the banner helps us double our mailing list size. If that happens, we’re keeping the banner in place, but then may go on to measuring how many sales we make as a result of the email campaigns. If the signup is negligible, it can help beforehand to think of whether we want to try other ways to get people signed up for email, or whether we want to try a different approach to increase sales.

And yes, then you can build the banner.

Start with a hypothesis, answer two questions, and then get to work. Deliver the thing, measure the outcome.
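One way to keep yourself honest is to write the hypothesis and both answers down before building anything. The sketch below is just one possible shape for that record, not a prescribed format; the `Hypothesis` class, its field names, and the measured values are all assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hypothesis:
    statement: str        # what we expect to change, and by how much
    target: float         # value the measured metric must reach
    if_successful: str    # action agreed on before building
    if_unsuccessful: str  # action agreed on before building

    def next_action(self, measured: float) -> str:
        """Return the pre-agreed action for the measured outcome."""
        if measured >= self.target:
            return self.if_successful
        return self.if_unsuccessful


banner = Hypothesis(
    statement="Mailing list grows by 50% within two weeks of launching the banner",
    target=50.0,
    if_successful="Keep the banner; next, measure sales from email campaigns",
    if_unsuccessful="Try other ways to get signups, or a different approach to sales",
)

# Hypothetical measurement: the list doubled (100% growth).
print(banner.next_action(100.0))
```

Because both actions are recorded up front, the measurement leads straight to a decision instead of a debate about what the number means.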

This approach is heavily borrowed from Eric Ries and his concept of Build→Measure→Learn. Build, measure, and learn yourself to figure out what works for you to measure the outcomes you want to change.

This brief exercise is, in my experience, a wonderful way to discover whether the thing you’re working on matters to customers. It’s one way to leverage customer metrics to make sure you’re focusing on the right things.

Outputs AND Outcomes

Let’s give the pointy hairs some credit. It makes sense that they want to see projects completed. Somewhere in the translation layer, they know that completing that project is going to change customer behavior. It’s easy enough as a development team to finish the thing AND measure the outcome. In my experience, a product-led / customer-centric / outcome driven approach eventually becomes the clear winner in mature software teams.

Build the thing and measure the outcome. Show both.

I love the conclusion - Finish the thing and measure the outcome. Too often, measuring the outcome is not one of the given requirements. Whoever gave the requirement knows what they're talking about. They don't think they need it to be measured.

In a story from my Microsoft days, a Program Manager (the HiPPO in this story) requested a new feature to improve performance. I did the analysis of use cases to argue that in most cases the feature would either reduce performance or have no effect. There were some corner cases where it would help. The HiPPO said those corner cases were important but agreed that the feature should be off by default and only turned on by those users who really needed it.

To give this story a real conclusion, I wish I had also implemented a way to measure how often the feature was used. That feedback would have been concrete information we all could have learned from.